Luigi Cheng

Data Scientist

I’m a Data Engineer and Analyst with hands-on experience designing ETL pipelines, building dashboards, and delivering insights that drive business outcomes. I currently support a 20-location restaurant group, where I manage everything from data infrastructure and A/B testing to reporting systems that track performance across branches. Before that, I worked remotely with international manufacturing clients, helping them clean complex supply chain data and develop predictive models. Along the way, I’ve built a solid foundation in Python, SQL, and cloud workflows. I’m continuing to grow through my Master’s in Data Science at UC San Diego.

Interested in collaborating or just sharing some insights? Feel free to reach out at luigi@luigidata.com

Analyzing

Across restaurant operations and supply chains, I’ve worked with structured and unstructured data to uncover insights that drive real-world action. From CSVs and sensors to A/B tests, I enjoy cleaning complex datasets and building visualizations that tell clear stories.

Developing

I build tools that transform raw data into usable systems. This includes automated ETL pipelines SQL dashboards and forecasting models. My recent work includes deploying ML-based anomaly detectors and real-time analytics tools using Python and cloud services.

Communicating

Data is only valuable when it’s understood. I regularly share results with stakeholders from kitchen staff to executive teams tailoring each story to the audience. Whether it’s a written report or a visual walkthrough I focus on clarity context and impact.

Fun facts about me

- I love reading manga. My favorites are One Piece and Mob Psycho 100

- I watch and play basketball. Klay Thompson is my go-to player

- I’m a mega foodie, especially when it comes to Italian and Japanese cuisine

- Born in Italy, family roots in China, now in America… how did that happen? God knows!

Featured Projects

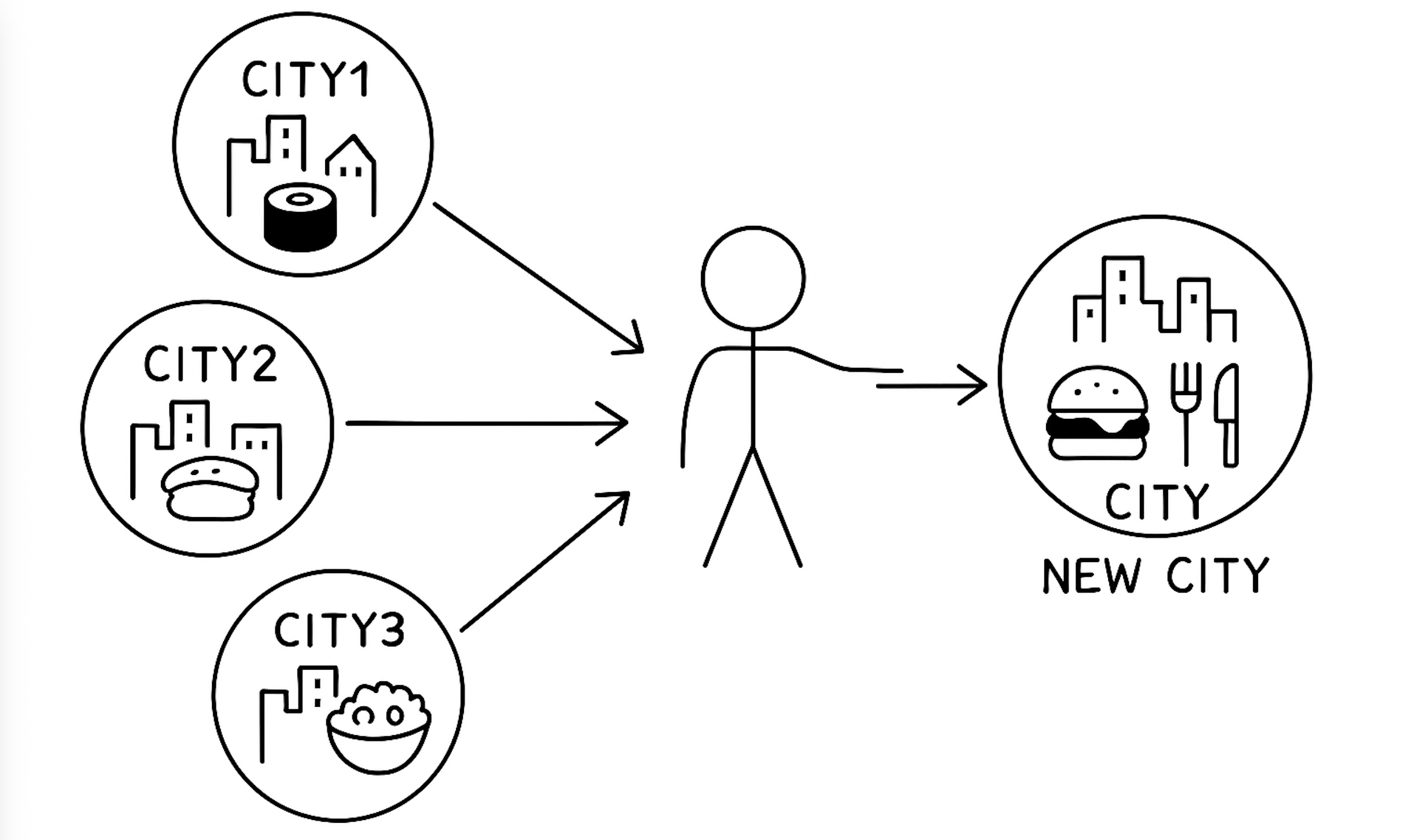

Restaurant Recommendation System

Moved to a new city and unsure where to eat? This system uses your past favorites to suggest restaurants you’re likely to love in your new location. Built with deep learning models, Streamlit for the front end, and integrated API pipelines, this project combines collaborative and content-based filtering to match your taste with top local spots using Yelp and Google Places.

Financial Market Predictive Models & Series

Can machine learning predict the stock market? This multi-part series explores that challenge using historical S&P 500 data, technical indicators, and engineered features. From logistic regression to XGBoost, the project tests multiple models with time-aware validation and class rebalancing. Later stages include ensemble modeling, trading strategy design, backtesting, and deployment via an interactive Streamlit app.

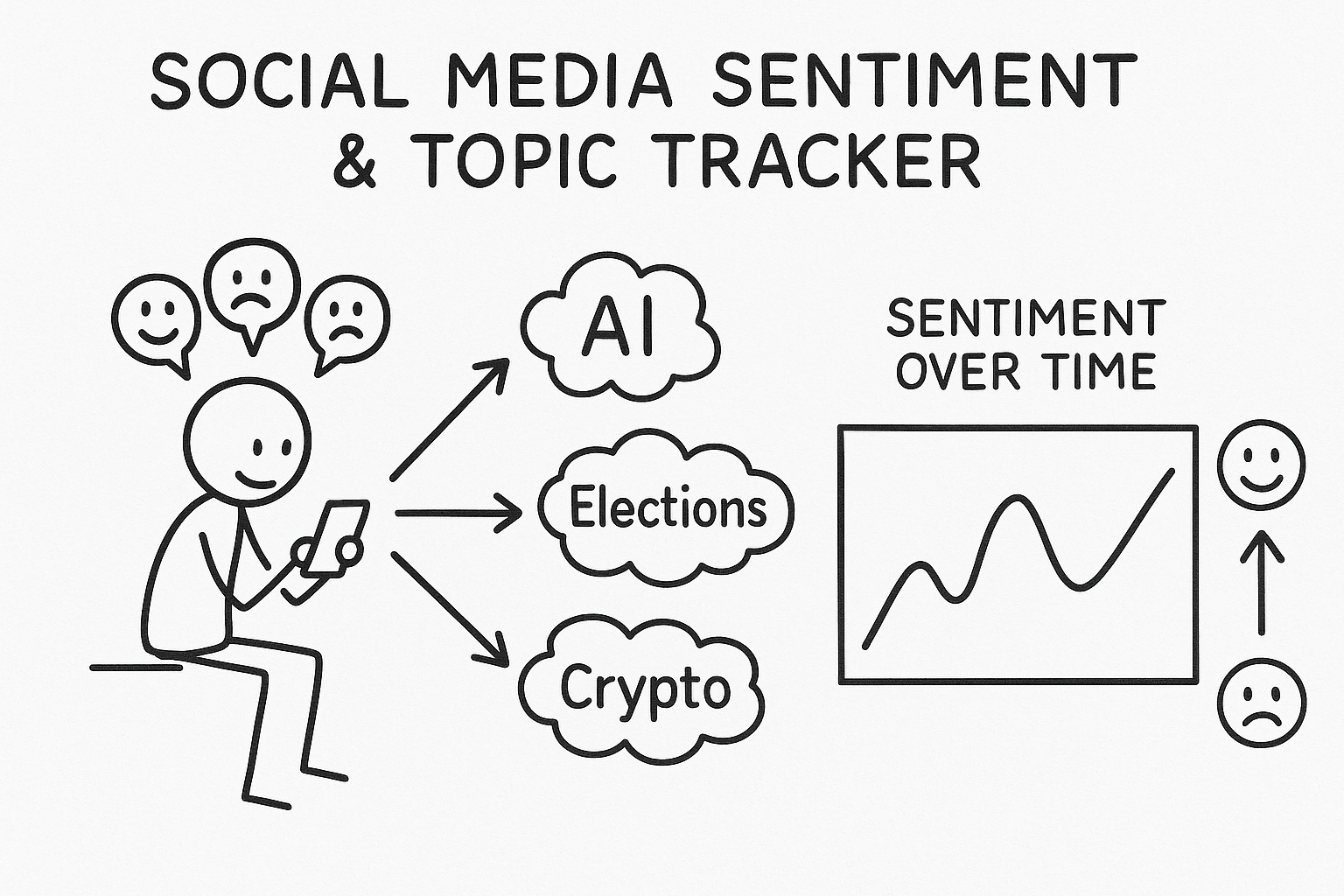

Social Media Sentiment & Topic Tracker

Ever wonder how public sentiment shifts in real time? This project collects Reddit and Twitter data to analyze emotional tone and trending topics using NLP pipelines. Built with Transformers, sentiment analysis models, and topic modeling techniques like BERTopic and LDA, it visualizes how online conversations evolve. The end product includes a modular pipeline, Docker setup, and Streamlit interface for live exploration.

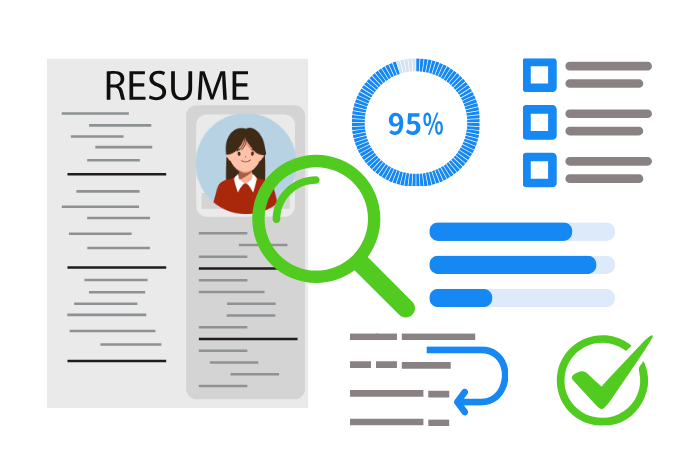

NLP Resume Matcher

Applying for jobs can feel like a black box. This project builds a resume–job matching engine using NLP and transformer models. By parsing job descriptions and resumes with spaCy and Hugging Face, it computes relevance scores and highlights skill gaps. Designed to help applicants understand fit and improve targeting, with a clean Streamlit front end for interactivity.